Last Saturday (October 24, 2015) was SF Bay ACM's annual Data Science Camp Silicon Valley. The venue was the Town Hall in PayPal's intergalactic headquarters at 2161 North 1st Street in San Jose, nestled between eBay and Apple's future Car Division, just one block from Comet, Foveon, Lumileds, Peaxy, and Toyota's research lab.

From the voting on the sessions, it appears that the event attracted data scientists from all over the US. A large number of participants had taken the Coursera classes on big data, machine learning, and data science and were now wondering how to progress from Coursera to a job (82 votes). As Sara Kalisin from Intel noted, when companies try out analytics, they do not really know what to do with the result and end up not staffing the project because the benefit is less than the employee's salary. In addition to the session on "Coursera to job," Sara also led a session with the title "How to showcase data science impact" (15 votes).

At the beginning of the day, Joseph Rickert and Robert Horton of Microsoft gave the tutorial "Introduction to R for Machine Learning." R, whose lineage goes back to the S language developed at Bell Labs in 1976, has become the most widely used data analysis software. It is undergoing astonishing growth and today has about 36,820 functions. The CRAN repository has a solution for almost all data analysis problems.

Originally, R was a statistical package with statistical tools acting on observations stored in arrays. There were different packages, like Weka, for mining large data sets stored in files, using machine learning to classify patterns and make predictions. However, today R has all the machine learning functionality on top of the original statistical tools. This has been possible because today a serious workstation has at least 64 GB of RAM, which allows big data to be stored in arrays.

When the data is too large to fit in memory, it can be partitioned into blocks, which can be processed sequentially or in parallel. However, this capability is not available with the free version of R and requires the expensive commercial enterprise version. Robert Horton announced that SQL Server 2016 will support the server-side execution of R functions. This means that the data will no longer have to be moved across the network for analysis.
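To make the block-wise idea concrete, here is a minimal sketch in Python (not the commercial R feature discussed in the tutorial). It assumes the data arrives as a stream of numbers and shows how per-block summaries can be merged into a global mean and variance without ever holding the full data set in memory. The function names `read_in_blocks`, `summarize_block`, `merge_summaries`, and `blockwise_mean_variance` are hypothetical, chosen only for this illustration.

```python
from typing import Iterable, Iterator, List, Optional, Tuple

Summary = Tuple[int, float, float]  # (count, mean, sum of squared deviations)


def read_in_blocks(values: Iterable[float], block_size: int) -> Iterator[List[float]]:
    """Yield consecutive blocks of at most block_size values."""
    block: List[float] = []
    for value in values:
        block.append(value)
        if len(block) == block_size:
            yield block
            block = []
    if block:
        yield block


def summarize_block(block: List[float]) -> Summary:
    """Compute the summary of a single block that fits in memory."""
    n = len(block)
    mean = sum(block) / n
    m2 = sum((x - mean) ** 2 for x in block)
    return n, mean, m2


def merge_summaries(a: Summary, b: Summary) -> Summary:
    """Combine two block summaries into the summary of their union."""
    n_a, mean_a, m2_a = a
    n_b, mean_b, m2_b = b
    n = n_a + n_b
    delta = mean_b - mean_a
    mean = mean_a + delta * n_b / n
    m2 = m2_a + m2_b + delta * delta * n_a * n_b / n
    return n, mean, m2


def blockwise_mean_variance(values: Iterable[float], block_size: int) -> Tuple[float, float]:
    """Return (mean, population variance), processing one block at a time."""
    total: Optional[Summary] = None
    for block in read_in_blocks(values, block_size):
        summary = summarize_block(block)
        total = summary if total is None else merge_summaries(total, summary)
    if total is None:
        raise ValueError("no data")
    n, mean, m2 = total
    return mean, m2 / n


if __name__ == "__main__":
    print(blockwise_mean_variance(range(1, 11), block_size=3))
```

Because the summaries merge associatively, the blocks could just as well be summarized in parallel and combined afterwards; the sequential loop is only the simplest variant.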

After the sponsored lunch, John Park from HP led a double session with the title "Malware Classification + ML + Crowd Sourcing" (46+44 votes). The amount of malware injected every day is mind-boggling. He uses an algorithm called Nilsimsa Hash on the binary files and uses natural language processing and classifiers trained through crowd-sourcing to find the malware.
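Nilsimsa belongs to the family of locality-sensitive hashes: unlike a cryptographic hash, similar inputs produce similar digests, so variants of a known malicious binary can be found by comparing digests. The sketch below is a deliberately simplified illustration of that idea and is not the actual Nilsimsa algorithm, nor the method presented in the session. It assumes consecutive byte trigrams hashed into 256 buckets with CRC32; the names `similarity_digest` and `matching_bits` are hypothetical.

```python
import zlib


def similarity_digest(data: bytes, buckets: int = 256) -> int:
    """Return a bit vector (as an int) summarizing the byte trigrams in data.

    Simplified locality-sensitive digest, NOT real Nilsimsa: each consecutive
    3-byte window is hashed into a bucket, and a bit is set when its bucket
    is hit more often than the average bucket.
    """
    counts = [0] * buckets
    n_trigrams = max(len(data) - 2, 0)
    for i in range(n_trigrams):
        counts[zlib.crc32(data[i:i + 3]) % buckets] += 1
    threshold = n_trigrams / buckets
    digest = 0
    for index, count in enumerate(counts):
        if count > threshold:
            digest |= 1 << index
    return digest


def matching_bits(a: int, b: int, buckets: int = 256) -> int:
    """Count the bit positions in which two digests agree."""
    return buckets - bin(a ^ b).count("1")


if __name__ == "__main__":
    original = bytes(range(256)) * 8
    patched = bytearray(original)
    patched[1000] ^= 0xFF  # flip one byte, as a small modification would
    score = matching_bits(similarity_digest(original),
                          similarity_digest(bytes(patched)))
    print(f"{score} of 256 bits agree")
```

A single changed byte touches only three trigrams, so only a handful of bits can flip; that stability under small edits is what makes this kind of digest usable as a feature for a classifier.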

Another very popular session with the title "I have data. Now What? IOT wearable space in life sciences. How to analyze the data for individual users. How do we build that" (117 votes) was led by Lee Courtney from Qurasense. Representatives from large international financial institutions and the health care industry participated in this interactive session. The only company permanently storing all data and continuously mining all of it was a manufacturer of set-top boxes for the cable industry.

For everybody else, storing the data was just too dangerous because of the flood of malware and because Hadoop has no security. The workaround is to ingest the data, mine it, and then delete it. Because the HDFS data ingest is very slow and each file must be stored in three copies, as little data as possible is preserved. At the end of the session, Lee Courtney summarized the top three unsolved problems for big data as

- no security

- no security

- poor ingest performance

As a note, there are file systems with excellent security that also support HDFS. They have a POSIX interface, so it is not necessary to move the data at all.

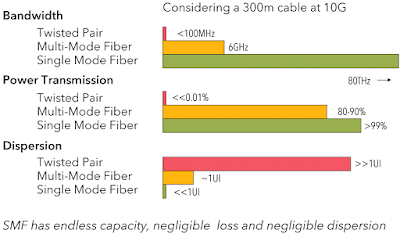

Moving the data is a big problem that will not go away, as illustrated by this figure by DataCore. Until about eight years ago, processors kept getting faster. However, when the wall of physical transistor shrinking was hit, the clock rates actually became slower to control the heat generation and allow for more cores on each die. With the increasing number of cores (for example, a typical workstation processor has eight cores, each with two hyperthreads, for a total of 16 hardware threads), less IO bandwidth is available to each core: the number of pins on the chip is still the same, and there is a growing IO gap.

Some people believe that cloud computing and virtual machines solve the problem. However, for storage this is an illusion. Indeed, according to the Hennessy/Patterson rules of thumb, for general computing the utilization rate is about 0.20–0.25 [HOHY14]. For storage servers, the utilization rate is 0.6–0.8 [AFG+10]. Statistical multiplexing is therefore less useful for storage, because with the OS and hypervisor overheads a processor is already maxed out. The IO gap comes on top of this!
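The arithmetic behind this argument can be sketched in a few lines of Python. This is a back-of-the-envelope model, not a capacity planning tool: it assumes each workload needs a constant fraction of one processor and that the hypervisor overhead is a fixed fraction of capacity. The function name `workloads_per_host` and the 10% overhead figure are assumptions made for illustration only.

```python
import math


def workloads_per_host(utilization: float, overhead: float = 0.0) -> int:
    """How many workloads of the given utilization fit on one processor.

    overhead is the fraction of capacity lost to the OS and hypervisor
    (a hypothetical parameter; the text gives no figure for it).
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    if not 0.0 <= overhead < 1.0:
        raise ValueError("overhead must be in [0, 1)")
    # The small epsilon guards against floating-point results such as 4.999999.
    return math.floor((1.0 - overhead) / utilization + 1e-9)


if __name__ == "__main__":
    for label, u in [("general computing, low", 0.20),
                     ("general computing, high", 0.25),
                     ("storage server, low", 0.6),
                     ("storage server, high", 0.8)]:
        print(f"{label}: {workloads_per_host(u)} per host, "
              f"{workloads_per_host(u, overhead=0.1)} with 10% assumed overhead")
```

Under these assumptions, four or five general-purpose workloads can share one processor, while a storage server at 0.6–0.8 utilization leaves no room for a second one, so there is nothing left to multiplex.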

[AFG+10] Michael Armbrust, Armando Fox, Rean Griffith, Anthony D Joseph, Randy Katz, Andy Konwinski, Gunho Lee, David Patterson, Ariel Rabkin, Ion Stoica, et al. A view of cloud computing. Communications of the ACM, 53(4):50–58, 2010.

[HOHY14] Md. Iqbal Hossain (Older) and Md. Iqbal Hossain (Younger). Dynamic scaling of a web-based application in a cloud architecture. Master’s thesis, School of Information and Communication Technology, KTH Royal Institute of Technology, Stockholm, 2014.