Last weekend I sat strapped into a small seat in a metal tube, like an astronaut in a Mercury capsule, being flung across the Atlantic and Canada's Northern Territories. I was reading the day's press from both sides of the Atlantic to catch up on the last two weeks of news and get an appreciation of the reality field's distortions.

On both sides of the Atlantic, physicists made front-page news, but for very different reasons, as you would expect in a Riemannian reality field. In the US newspaper, a journalist had been chasing so-called financial geniuses in New York and London to get the lowdown on $700 billion of toxic financial paper. In the European newspapers the story was on page four, with the question of why the US Government was talking about $700 billion when the actual amount of toxic paper was $3,500 billion, or $3,500,000,000,000.00.

Anyway, that is what you get with reality distortion, but it was not what caught my attention. The journalists were not able to get any financial genius to speak on the record, so they reported remarks from both sides of the Atlantic stating that the financial instruments were so complex that there was no way they (the geniuses) could understand them; that is why they hired quantum mechanics physicists to cook up risk models.

So, there it was in black and white: the quantum mechanics physicists are to blame for the $3,500 billion of toxic paper. Hmm, and I thought the only toxic paper quantum physicists handle is that in the litter box of Schrödinger's cat. And they cannot even know whether the cat is dead or alive.

The story about the quantum physicists would have been more believable if they had written that the $3,500 billion disappeared into a black hole when the Large Hadron Collider (LHC) was turned on in Geneva (see this article on page 1291 of Science magazine of 5 September 2008).

That is what I read in the US newspapers. In the European newspapers physicists made the front page for completely different reasons. The first reason was the LHC. There had been some apprehension about black holes, but the start of operations on 10 September was a complete success. Unfortunately, over a week later, a possibly faulty electrical connection between two of the accelerator's magnets caused a large helium leak into sector 3-4, moving the start of the experiments to March 2009.

What the newspapers explained in some detail was how beneficial the $8 billion spent on the LHC was for European industry, because it spurred a large amount of new technology in fields like superconductors and low-temperature materials. While I was reading this, I thought, wow, $8 billion << $3,500 billion. We could have had our own supercollider in Texas for only the bonuses of one bank in one year!?

The second piece of front-page news related to physics in European newspapers was Zhai Zhigang's space walk and the impact the development of the Shenzhou 7 capsule and its launching technology had on Chinese industry, leading it to develop more advanced technologies.

As a whole, from a European perspective, quantum physics and rocket science are not as bad as they are believed to be on this side of the Atlantic. From an international point of view, that had already been decided at the Nuremberg trials, which lets me continue with the meat of this post without shame.

It did not make the newspapers, but last week our paper on experiments supporting the concept of a g(2)-camera was published. If your institution does not subscribe to SPIE's Digital Library, you can buy it for only $18.00 (those are plain dollars, not billions).

Recent experiments have reported the Bose-Einstein condensation (BEC) phase transition for exciton-polariton systems in a semiconductor microcavity. The macroscopic quantum degeneracy is typically detected by probing the statistical properties of light emitted from a microcavity, under the presumption that the statistics of the exciton polaritons are faithfully transferred to the emanating photons.

The macroscopic quantum degeneracy can be established by measuring the correlations, viz. first-order in the electric fields:

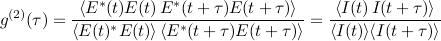

and second-order in the electric fields:

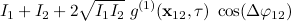

Moreover, it has been assumed that observation of the interference fringes similar to those in Michelson or Young interferometers is sufficient to establish the fact of macroscopic coherence in exciton-polariton systems. Two points on the wave front separated by a distance x12 produce an intensity pattern

such that the fringe visibility measures the magnitude of the first-order correlation function g(1)(x12, τ). But measuring this quantity alone is ambiguous, because a coherent light source (e.g., a photon laser or decaying polariton BEC) can exhibit the same first-order correlations as a chaotic (or thermal) light source (e.g., an Hg-Ar discharge lamp). The table below shows that proper disambiguation of a coherent state also requires measurement of the second-order correlation function

associated with intensity noise correlations. Here, I1,2(t) is the light intensity at a point ±½ x12 and time t.
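For reference, the quantities in this passage can be written in their standard textbook forms. This is only a sketch in conventional notation, assuming classical, stationary fields; the relative phase φ(x) and the symbols I_max and I_min are my own labels, and the expressions in the paper may differ in detail.

```latex
% Two-point interference pattern (Young/Michelson type);
% I_1, I_2 are the mean intensities from the two points, \phi(x) the relative phase
I(x) = I_1 + I_2 + 2\sqrt{I_1 I_2}\,\bigl|g^{(1)}(x_{12},\tau)\bigr|\cos\phi(x)

% Fringe visibility; it reduces to |g^{(1)}| when I_1 = I_2
V = \frac{I_{\max}-I_{\min}}{I_{\max}+I_{\min}}
  = \frac{2\sqrt{I_1 I_2}}{I_1+I_2}\,\bigl|g^{(1)}(x_{12},\tau)\bigr|

% Second-order (intensity) correlation between the points at \pm x_{12}/2
g^{(2)}(x_{12},\tau) =
  \frac{\langle I_1(t)\,I_2(t+\tau)\rangle}
       {\langle I_1(t)\rangle\,\langle I_2(t)\rangle}
```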

Maximal values of respective correlation functions for incoherent, coherent and thermal light states

| correlation function \ photon states | incoherent | coherent | chaotic |
|---|---|---|---|
| g(1)(x, 0) | 0 | 1 | 1 |
| g(2)(x, 0) | 1 | 1 | 2 |
| ∆g(2)(x, 0) | 0 | 0 | 1 |

The minimal condition to confirm the BEC phase transition in a polariton system then becomes, reading off the coherent column of the table, g(1)(x, 0) = 1 together with g(2)(x, 0) = 1.

Our imager detects the spatial correlation excess shown as ∆g(2) ≡ g(2)(x, 0) – 1 in the third row of the table above.
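To make the correlation excess concrete, here is a minimal numerical sketch, not the processing used in our imager. It assumes two detectors close enough together to see the same intensity (the x → 0 limit), models coherent light as a constant intensity, and models chaotic light as single-mode thermal light with exponentially distributed intensity. The function names `g2_zero_delay` and `g2_excess` are hypothetical and chosen for this illustration only.

```python
import random
from statistics import fmean


def g2_zero_delay(i1, i2):
    """Normalized zero-delay intensity correlation <I1*I2> / (<I1><I2>)."""
    cross = fmean([a * b for a, b in zip(i1, i2)])
    return cross / (fmean(i1) * fmean(i2))


def g2_excess(i1, i2):
    """Correlation excess, delta g2 = g2 - 1."""
    return g2_zero_delay(i1, i2) - 1.0


rng = random.Random(0)  # fixed seed so the run is repeatable
n = 200_000

# Assumption: coherent light has a constant classical intensity.
coherent = [1.0] * n
# Assumption: single-mode chaotic (thermal) light has an exponentially
# distributed instantaneous intensity with unit mean.
chaotic = [rng.expovariate(1.0) for _ in range(n)]

# Both "detectors" see the same samples, i.e. the x -> 0 limit.
excess_coherent = g2_excess(coherent, coherent)
excess_chaotic = g2_excess(chaotic, chaotic)

print(f"coherent: delta g2 = {excess_coherent:.3f}")
print(f"chaotic:  delta g2 = {excess_chaotic:.3f}")
```

Under these assumptions the coherent excess is zero and the chaotic excess comes out close to 1, which matches the third row of the table.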

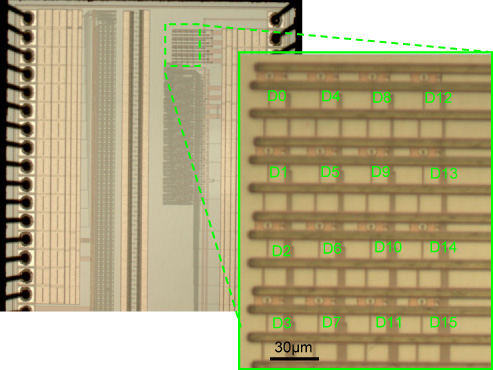

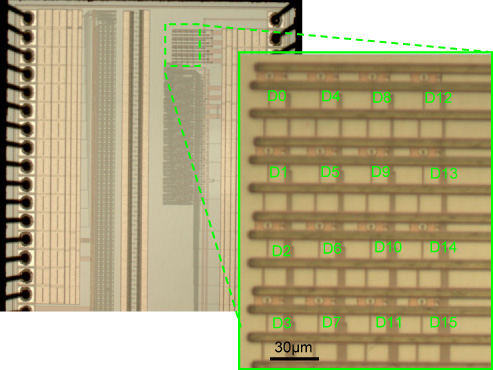

In our paper, we present a novel g(2)-imager built with conventional CMOS technology, which is capable of measuring second-order spatio-temporal correlated photons and thereby offers an important means for verifying the existence of a BEC state of cavity exciton polaritons.

One potential limitation when imaging BECs with our device is the requirement that ∆g(2) = 0, which corresponds to a null measurement. For BEC detection, however, we anticipate that a more practical device could combine conventional g(1)-imaging with g(2)-imaging, either as the same camera operated in two distinct modes or as two distinct cameras working together.

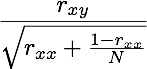

Future work will include the development of larger arrays of SPADs, the integration of on-chip data processing based on equation

and the extension to other g(2)-imaging applications.

A surprising feature of the g(2)-camera is that the parallelism of the sensor stemming from using N detectors does not scale linearly but binomially. For example with a 4 × 4 SPAD array all 16 detectors have separate parallel outputs so that (16 choose 2) = 16·15/2 = 120 simultaneous pairwise measurements are possible.
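The pair count is easy to check by brute force. The following is a small sketch that enumerates every unordered pair of detectors on a rectangular array; the helper names `detector_pairs` and `pair_count` are hypothetical and not taken from the paper.

```python
from itertools import combinations
from math import comb


def detector_pairs(rows, cols):
    """List every unordered pair of detector positions on a rows x cols array."""
    detectors = [(r, c) for r in range(rows) for c in range(cols)]
    return list(combinations(detectors, 2))


def pair_count(rows, cols):
    """Closed form: N choose 2 with N = rows * cols detectors."""
    return comb(rows * cols, 2)


pairs = detector_pairs(4, 4)
print(len(pairs))  # 120 pairs for the 4 x 4 array

# The number of pairs grows roughly as N^2 / 2 with the number of detectors N.
for side in (2, 4, 8):
    print(side * side, "detectors ->", pair_count(side, side), "pairs")
```

Doubling the side of the array from 4 to 8 quadruples the number of detectors but raises the number of simultaneous pairwise measurements from 120 to 2016.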

You can get the full paper from this link: http://spie.org/x648.xml?product_id=795166.